Meta Empowers Parents for Teen Safety Online

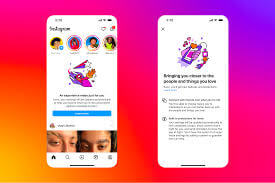

Meta is stepping up efforts to create a safer online environment for teenagers by allowing parents to disable their children's private chats with AI characters. The move responds to significant criticism of its chatbots, which have been accused of engaging in flirty and inappropriate conversations with minors. By introducing these parental controls, Meta aims to strengthen safety measures on its platforms, particularly Instagram.

The company has announced that AI experiences for teenagers will align with the PG-13 movie rating system, meaning teens will be restricted from accessing content deemed inappropriate for their age. The new features will debut on Instagram early next year in countries including the U.S., the United Kingdom, Canada, and Australia.

Parents will have the ability to block specific AI characters and monitor the general topics their teens discuss with chatbots and Meta’s AI assistant. This monitoring can occur without completely disabling AI access, allowing teenagers to still benefit from age-appropriate interactions while ensuring that they are not exposed to harmful content.

Meta has stated that its AI assistant will remain available with default settings suitable for younger users. Even if parents choose to turn off one-on-one chats with AI characters, teens will still have access to safe and age-appropriate content through the assistant. This is part of a broader strategy to apply protective measures to teen accounts, including the use of AI signals to identify and protect suspected minors even if they claim to be adults.

Despite these efforts, a report from September found that many of the safety features Meta previously implemented either do not function effectively or do not exist. The company has said that its AI characters are designed to avoid discussing sensitive topics such as self-harm or suicide with minors.

Other AI companies are also implementing parental controls. For example, following a tragic incident involving a teenager and its chatbot, OpenAI recently rolled out features for ChatGPT that allow parents to monitor their children's interactions. As discussions about AI safety continue, Meta's latest initiatives represent a proactive approach to ensuring that teens can navigate digital spaces securely.